One of the first hurdles one has to overcome in achieving the CCIE - EI is getting access to a proper lab environment. This post covers how I have solved this for the CCIE EI v1.1.

Hardware & Software

The new equipment and software list has some major changes. The most important changes are as follows:

- The DNAC is bumped to a much improved v2.3

- The DNAC is now a lot easier to run virtualized!

- The vEdges are fully replaced by cEdges

- The CSR 1000v is replaced by the C8Kv

Virtual machines

These images are included in Cisco Modeling Labs:

- Cisco Catalyst 8000V Routers with Cisco IOS XE Software Release 17.9

- Cisco IOSv with Cisco IOS Software Release 15.8

- Cisco IOSv-L2 with Cisco IOS Software Release 15.2

I experienced stability issues with all currently available 17.9 versions of the Cat8kv in controller mode. IOS-XE 17.6 proved to be far more reliable, and doesn’t miss anything crucial to the blueprint AFAIK. The most recent IOSv-L2 image (2020) supports a lot of things, but it doesn’t handle L3 port-channels too well, so stick to SVIs.

Depending on your org these should be available for download through the regular Cisco downloads page:

- Cisco SD-WAN (vManage, vBond, vSmart, cEdge) Software Release 20.9

- Cisco DNA Center, Release 2.3

Depending on when you’re reading this, you should be able to get access to the DNAC-VA image for lab purposes by ordering it without a service agreement (might require partner status). Refer to the Catalyst Center VA FAQ, which currently reads “Simply place a $0 purchase order on Cisco Commerce using the Cisco PID: DN-SW-APL”. The DNAC-VA 2.3.7.3 is the most recent version at the time of writing. It is rock solid, and I don’t see any good reason to use the hardware appliance over it at this point.

Physical Equipment

- Cisco Catalyst 9300 Series Switches Release 16.12

It is preferable to lab the SDA portion on physical equipment. Currently the cheapest option for physical equipment is to go for used Catalyst 3850 switches. If you work for a partner you should be able to get them for a nice price from Cisco Refurb with NFR discounts.

These switches can soon be fully replaced by the Cat9kv, but it is currently not quite there. You should use the 17.13 image if it is available to you (not supplied in CML). In its current state the image is unstable and many features aren’t working as expected (LAN auto and MAB, for example). If you run into issues you should try rebooting the node. Wherever possible you should use the Catalyst 8000v for control-plane and border nodes. It is faster, more stable and, as a nice bonus, it requires fewer resources.

Supporting virtual machines

- Cisco Identity Services Engine 3.1

- Linux Desktop

The CCIE - EI host VM for 1.0 is available for download on the Cisco Learning Network. I don’t know whether this is still the image used in the lab, but I don’t expect that they have changed the most important tools. I am hence still using this for all “computer” nodes in my lab.

Based on the CCIE - EI Learning Network webinars the desktop environment will be Xubuntu 18.04. This is available as an OVA from osboxes.org or as an ISO from Xubuntu.org. I went the ISO route with a ‘minimal installation’.

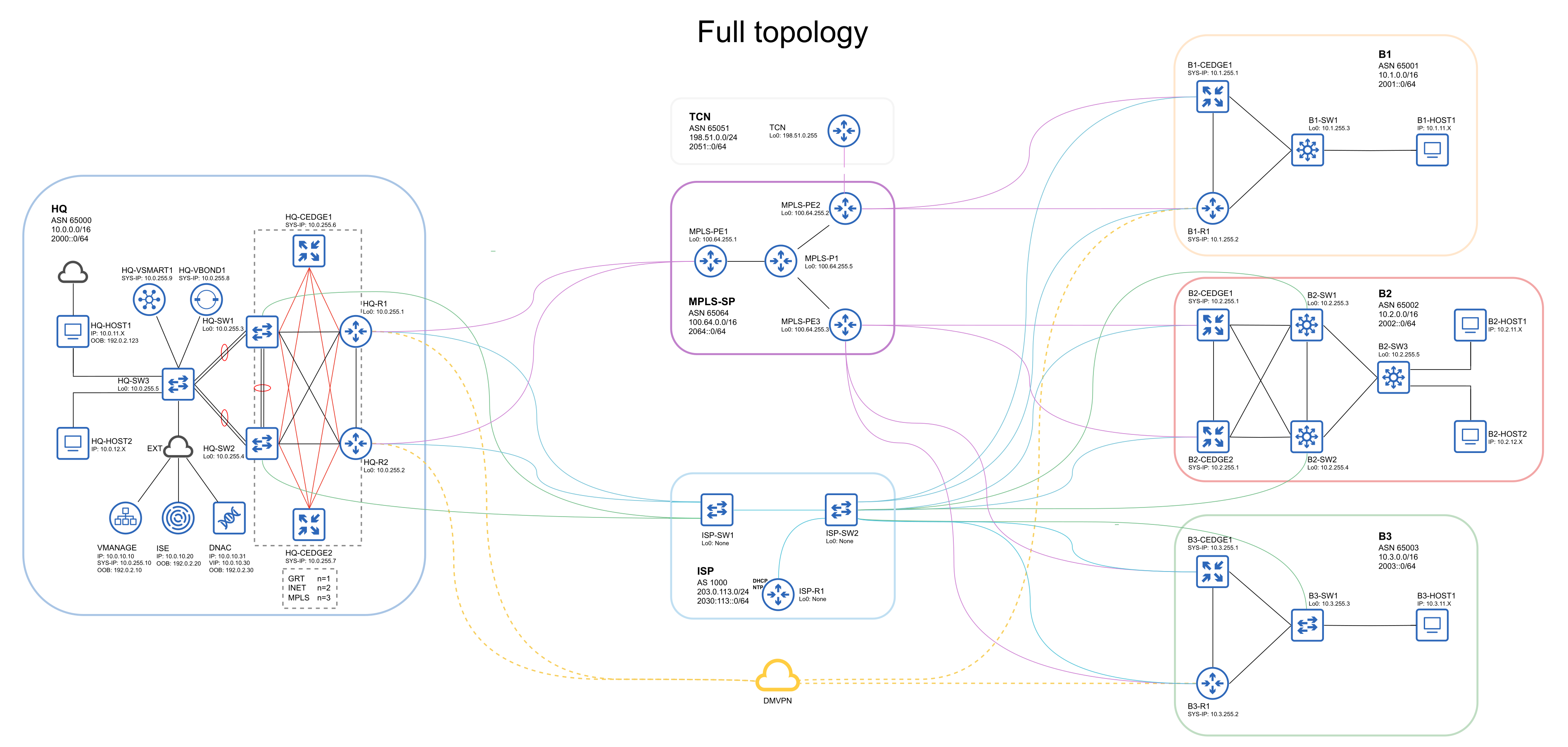

Lab topology

Due to the new blueprint and heavier nodes than in v1.0 I have had to change my lab topology away from the one presented in the 1.0 webinars. This topology is however inspired by the topology presented in the webinars, which I believe is the work of Peter Palúch. I have put quite a lot of thought into how I should build up my lab such that I can still do “everything” without modifying the topology.

When labbing I keep a few copies of the full topology available to write/think on and a copy of the detailed documentation. You can download the documentation in PDF format here.

Once I find the time to clean them up I will publish my EVE-ng and CML lab files. I have a tendency to use profanity for naming things in the lab, which would be inappropriate to publish. If you can handle some strong Norwegian vocabulary and want the files before then, get in touch!

Spinning it up

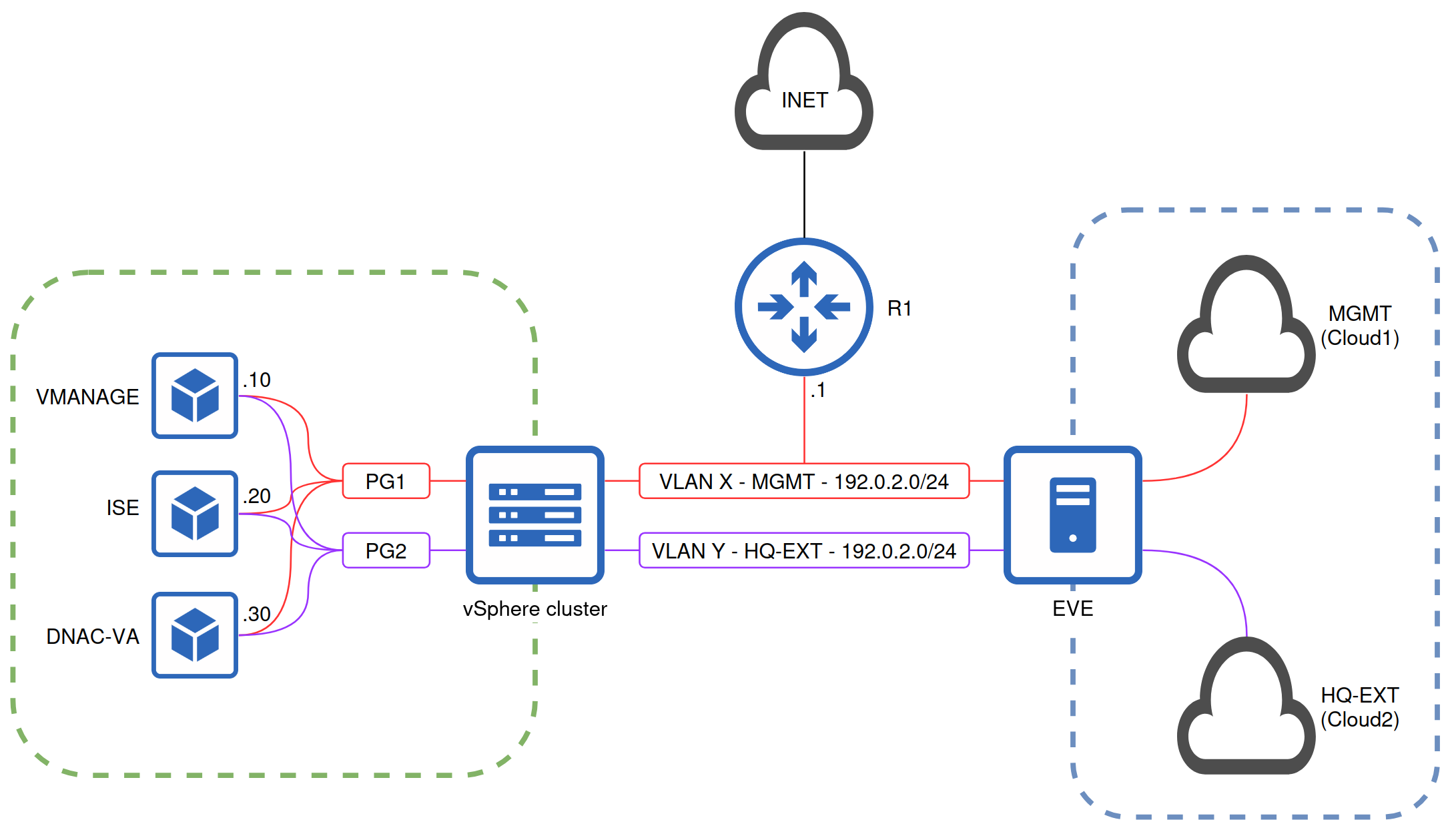

For practical reasons I keep all config-loadable nodes in EVE, and all others in VMware. This way I save some resources on my EVE-ng host and can utilize snapshotting/cloning to “reset” those nodes when I need to. I have glued my lab environment together like this:

I run EVE-ng bare-metal on a Dell R720 with a 40 core CPU and 256GB of memory. This is barely able to run the topology above. If you are investing in hardware for this I would go for a Gen 13, or ideally Gen 14, server if your budget allows it. You should stick to Intel CPUs as some CML nodes apparently don’t work well on AMD.

I am able to run the VMware-hosted nodes on a single ESXi host of the same spec with some room to spare. To be able to overprovision the DNAC VA you can untar the OVA file, edit the OVF file it contains to remove all reservations, and then recreate the OVA file. Note that the DNA Center virtual appliance consumes very few resources after booting. It does however consume all the resources it is allocated while booting, so avoid booting multiple nodes at the same time.
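
The untar, edit and repack steps for the OVA can be sketched in Python. This is a minimal sketch under stated assumptions, not a verified procedure for the DNAC VA image: it assumes the reservations appear in the OVF as `<rasd:Reservation>` elements (the usual OVF form, though the namespace prefix may differ in your file), that dropping the `.mf` manifest is acceptable to your import tool since its checksum for the OVF no longer matches after the edit, and that you have enough free disk space for a full extracted copy. The function names and file paths are hypothetical.

```python
import re
import tarfile
from pathlib import Path

# Assumption: reservations are written as <rasd:Reservation>N</rasd:Reservation>.
# Check your OVF; the namespace prefix may not be "rasd".
RESERVATION_RE = re.compile(r"\s*<rasd:Reservation>[^<]*</rasd:Reservation>")


def strip_reservations(ovf_text: str) -> str:
    """Return the OVF descriptor text with all reservation elements removed."""
    return RESERVATION_RE.sub("", ovf_text)


def rebuild_ova(src_ova: str, dst_ova: str, workdir: str) -> None:
    """Extract src_ova into workdir, strip reservations, and write dst_ova."""
    work = Path(workdir)
    work.mkdir(parents=True, exist_ok=True)

    # An OVA is a plain tar archive; remember the original member order.
    with tarfile.open(src_ova) as tar:
        names = tar.getnames()
        tar.extractall(work)

    ovf_name = next(n for n in names if n.endswith(".ovf"))
    ovf_path = work / ovf_name
    ovf_path.write_text(
        strip_reservations(ovf_path.read_text(encoding="utf-8")),
        encoding="utf-8",
    )

    # The OVF descriptor goes first. The manifest (and any certificate) is
    # left out because its checksum for the edited OVF is now stale.
    ordered = [ovf_name] + [
        n for n in names if n != ovf_name and not n.endswith((".mf", ".cert"))
    ]

    # GNU format mirrors what a plain `tar -cf` produces on most Linux hosts
    # and copes with large disk files (an assumption about what works here).
    with tarfile.open(dst_ova, "w", format=tarfile.GNU_FORMAT) as tar:
        for name in ordered:
            tar.add(work / name, arcname=name)
```

Calling `rebuild_ova("dnac-va.ova", "dnac-va-noreserve.ova", "ova-work")` with your own file names would produce a new OVA next to the original. Editing the OVF as text rather than re-serializing the XML keeps the rest of the descriptor byte-for-byte identical, which avoids surprises with namespace prefixes.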

If “pay as you go” pricing is preferable you should be able to run this on an AWS m7i.12xlarge instance. There are guides online on how to run EVE-ng on AWS. The DNAC VA and ISE nodes can also be run for a reasonable price on AWS.

When using physical equipment for SDA I found it best to replace all fabric devices in branch 2. I added the Gi0/management interfaces of all physical devices to the management VLAN and created 4 VLANs and/or EVE-ng “clouds” for link-nets and the client networks. I had a hard time getting 802.1x to work when virtualizing the client machine NICs. A better alternative is to host these clients in VMware and pass through the physical NICs attached to the edge switches.

Summary

Availability of the necessary hardware and software has gotten a lot better since the last time I wrote this post. There are also multiple reputable CCIE EI rack rental providers available, including Cisco themselves through their practice labs.

Good luck in your preparations!

Got feedback or a question?

Feel free to contact me at hello@torbjorn.dev